Python Web Scraping in Practice: Collecting Taobao Product Information and Exporting It to an Excel Spreadsheet

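Table of contents

Introduction

- I. Analyzing the structure of Taobao URLs

- II. Viewing the page source and extracting information with the re library

- 1. Viewing the source

- 2. Extracting information with the re library

- III. Writing the functions

- IV. Writing the main function

- V. Complete code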

前言

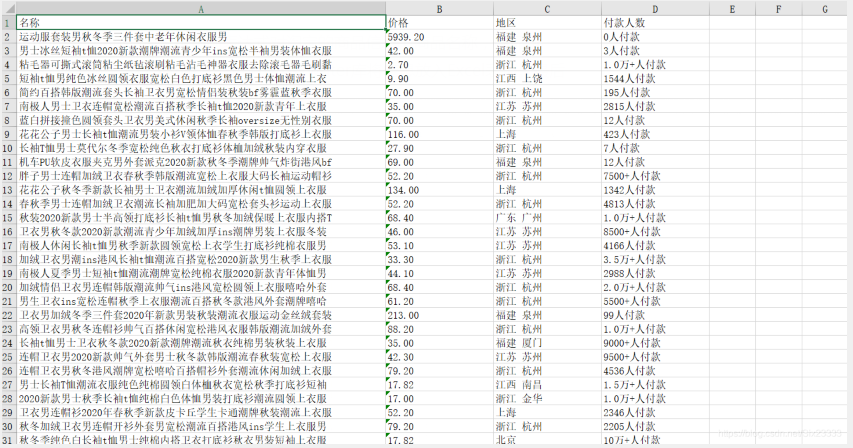

本文簡(jiǎn)單使用python的requests庫(kù)及re正則表達(dá)式對(duì)淘寶的商品信息(商品名稱,商品價(jià)格,生產(chǎn)地區(qū),以及銷售額)進(jìn)行了爬取,并最后用xlsxwriter庫(kù)將信息放入Excel表格。最后的效果圖如下:


I. Analyzing the structure of Taobao URLs

1. Our first requirement is to enter a product name and get back the matching information.

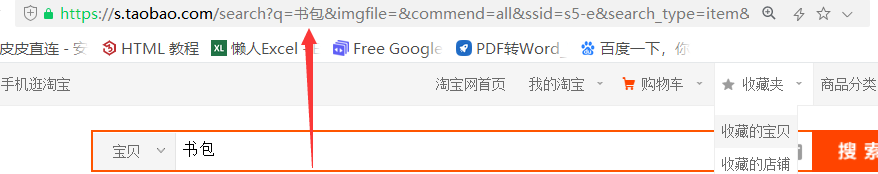

So we pick an arbitrary product and look at its URL. Here we search for 书包 (schoolbag); opening the results page gives the following URL:

https://s.taobao.com/search?q=%E4%B9%A6%E5%8C%85&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306

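The URL on its own may not reveal much, but the screenshot offers some clues.

The value of the q parameter is the name of the item we are searching for, in percent-encoded form.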
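To make the link between the keyword and the q value concrete, here is a minimal sketch using the standard library's urllib.parse. The original script does not use this module, so treat it purely as an illustration of how the value in the example URL arises.

```python
from urllib.parse import quote, unquote

keyword = "书包"  # the search keyword behind the example URL

# Percent-encode the keyword as UTF-8, the form that follows "q=" in the URL.
encoded = quote(keyword)
print(encoded)  # %E4%B9%A6%E5%8C%85

# Decoding the q value from the URL recovers the original keyword.
decoded = unquote("%E4%B9%A6%E5%8C%85")
print(decoded == keyword)  # True
```

In practice requests encodes non-ASCII characters in the URL automatically, which is presumably why the script can concatenate the raw keyword.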

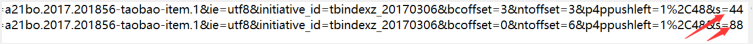

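2. Our second requirement is to scrape as many result pages as the user asks for.

So let us look at how the URLs of the later pages are built.

From these we can conclude that pagination is driven by the final s parameter, whose value is 44 * (page number - 1).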
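The pagination rule can be written out as a small calculation. This is only a sketch of the arithmetic: page_offset and PAGE_SIZE are names made up for the illustration, and the page size of 44 is the value inferred from the URLs above.

```python
PAGE_SIZE = 44  # items per results page, as inferred from the URLs


def page_offset(page):
    """Return the value of the s parameter for a 1-based page number."""
    return PAGE_SIZE * (page - 1)


offsets = [page_offset(p) for p in range(1, 5)]
print(offsets)  # [0, 44, 88, 132]
```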

II. Viewing the page source and extracting information with the re library

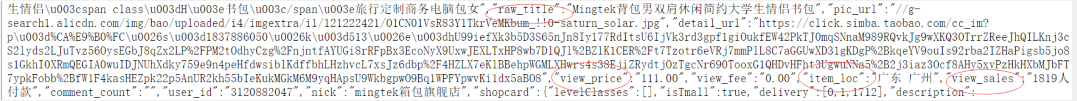

1. Viewing the source

The fields shown here are all ones we need.

2. Extracting information with the re library

    a = re.findall(r'"raw_title":"(.*?)"', html)   # product title
    b = re.findall(r'"view_price":"(.*?)"', html)  # price
    c = re.findall(r'"item_loc":"(.*?)"', html)    # region
    d = re.findall(r'"view_sales":"(.*?)"', html)  # sales figure
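To see what these patterns return, here is a small sketch run against a made-up fragment. The sample string is hypothetical and only imitates the key/value layout of the page source; real pages contain far more data.

```python
import re

# Hypothetical fragment imitating the data embedded in the page source.
sample = (
    '{"raw_title":"Canvas schoolbag","view_price":"59.00",'
    '"item_loc":"Zhejiang Hangzhou","view_sales":"1200 paid"},'
    '{"raw_title":"Leather backpack","view_price":"129.00",'
    '"item_loc":"Guangdong Guangzhou","view_sales":"300 paid"}'
)

# Non-greedy (.*?) stops at the nearest closing quote, giving one value per item.
titles = re.findall(r'"raw_title":"(.*?)"', sample)
prices = re.findall(r'"view_price":"(.*?)"', sample)
print(titles)  # ['Canvas schoolbag', 'Leather backpack']
print(prices)  # ['59.00', '129.00']

# A greedy (.*) would run on to the last quote in the text instead.
greedy = re.findall(r'"raw_title":"(.*)"', sample)
print(len(greedy))  # 1
```

The non-greedy quantifier is what keeps each match confined to a single field.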

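III. Writing the functions

I wrote three functions here. The first one fetches the HTML page:

    def GetHtml(url):
        # headers is the module-level dict defined in the main block
        r = requests.get(url, headers=headers)
        r.raise_for_status()  # raise an exception on 4xx/5xx responses
        r.encoding = r.apparent_encoding  # use the encoding detected from the content
        return r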

The second one builds the list of page URLs:

    def Geturls(q, x):
        # First page: the search URL for keyword q, with no s offset
        url = "https://s.taobao.com/search?q=" + q + "&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm" \
              "=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306"
        urls = []
        urls.append(url)
        if x == 1:
            return urls
        # Later pages: same keyword, with s = 44 * (page number - 1)
        for i in range(1, x):
            url = "https://s.taobao.com/search?q=" + q + "&commend=all&ssid=s5-e&search_type=item" \
                  "&sourceId=tb.index&spm=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306" \
                  "&bcoffset=3&ntoffset=3&p4ppushleft=1%2C48&s=" + str(i * 44)
            urls.append(url)
        return urls
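As an alternative to string concatenation, the same list could be assembled with urllib.parse.urlencode, which also takes care of percent-encoding the keyword. This is only a sketch: build_urls is a hypothetical helper, and it assumes that the tracking parameters (spm, ssid and so on) can be dropped and that s=0 is accepted for the first page, neither of which the original article verifies.

```python
from urllib.parse import urlencode

BASE = "https://s.taobao.com/search"


def build_urls(keyword, pages, page_size=44):
    """Return one search URL per page (hypothetical helper, not from the article)."""
    urls = []
    for page in range(pages):
        # Assumption: only q, ie and s are needed; s = page_size * page index.
        params = {"q": keyword, "ie": "utf8", "s": page * page_size}
        urls.append(BASE + "?" + urlencode(params))
    return urls


urls = build_urls("书包", 3)
for u in urls:
    print(u)
```

Keeping the parameters in a dict makes it easy to add back any of the original query parameters if the shortened URL turns out not to work.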

The third one extracts the product information we need and writes it to the Excel worksheet:

    def GetxxintoExcel(html):
        global count  # global counter of rows already written, used to place the next rows
        a = re.findall(r'"raw_title":"(.*?)"', html)  # (.*?) is a non-greedy match of any characters
        b = re.findall(r'"view_price":"(.*?)"', html)
        c = re.findall(r'"item_loc":"(.*?)"', html)
        d = re.findall(r'"view_sales":"(.*?)"', html)
        x = []
        for i in range(len(a)):
            try:
                x.append((a[i], b[i], c[i], d[i]))  # collect each product's fields in a new list
            except IndexError:
                break
        for i in range(len(x)):
            # worksheet.write(row, col, data): zero-based row, then column, then the value to write
            worksheet.write(count + i + 1, 0, x[i][0])
            worksheet.write(count + i + 1, 1, x[i][1])
            worksheet.write(count + i + 1, 2, x[i][2])
            worksheet.write(count + i + 1, 3, x[i][3])
        count = count + len(x)  # the next page starts right after the rows written so far
        print("Done")
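The alignment and row-numbering logic can be tried out without a workbook. The sketch below uses zip in place of the IndexError guard; extract_rows, row_numbers and the sample text are all made up for the illustration, and nothing here touches xlsxwriter.

```python
import re

FIELDS = ("raw_title", "view_price", "item_loc", "view_sales")


def extract_rows(html):
    """Return (title, price, region, sales) tuples found in html."""
    columns = [re.findall(r'"%s":"(.*?)"' % f, html) for f in FIELDS]
    # zip stops at the shortest list, like the IndexError guard in the function above.
    return list(zip(*columns))


def row_numbers(count, rows):
    """Worksheet rows for one page, given count rows already written."""
    return [count + i + 1 for i in range(len(rows))]


# Hypothetical page text: the second item lacks a view_sales field.
page = ('"raw_title":"A","view_price":"1.00","item_loc":"X","view_sales":"5",'
        '"raw_title":"B","view_price":"2.00","item_loc":"Y"')
rows = extract_rows(page)
print(rows)                   # [('A', '1.00', 'X', '5')]
print(row_numbers(0, rows))   # [1]
print(row_numbers(44, rows))  # [45]
```

Row 0 is left for the header, which is why the first data row is 1. Both this sketch and the original function pair fields by position, so an item that lacks a field in the middle of a page would shift the rows after it.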

IV. Writing the main function

    if __name__ == "__main__":
        count = 0  # number of data rows written so far
        headers = {
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36",
            # The cookie is unique to each user. Because of anti-scraping measures,
            # crawling too fast may force you to refresh your own cookie later on.
            "cookie": "",
        }
        q = input("Enter the product name: ")
        x = int(input("How many pages do you want to scrape? "))
        urls = Geturls(q, x)
        workbook = xlsxwriter.Workbook(q + ".xlsx")
        worksheet = workbook.add_worksheet()
        worksheet.set_column('A:A', 70)
        worksheet.set_column('B:B', 20)
        worksheet.set_column('C:C', 20)
        worksheet.set_column('D:D', 20)
        worksheet.write('A1', 'Name')
        worksheet.write('B1', 'Price')
        worksheet.write('C1', 'Region')
        worksheet.write('D1', 'Buyers')
        for url in urls:
            html = GetHtml(url)
            GetxxintoExcel(html.text)
            time.sleep(5)  # pause between pages
        # Do not open the Excel file before the program finishes; it is saved in the current directory.
        workbook.close()

V. Complete code

    import re
    import requests
    import xlsxwriter
    import time


    def GetxxintoExcel(html):
        global count  # rows already written to the worksheet
        a = re.findall(r'"raw_title":"(.*?)"', html)
        b = re.findall(r'"view_price":"(.*?)"', html)
        c = re.findall(r'"item_loc":"(.*?)"', html)
        d = re.findall(r'"view_sales":"(.*?)"', html)
        x = []
        for i in range(len(a)):
            try:
                x.append((a[i], b[i], c[i], d[i]))
            except IndexError:
                break
        for i in range(len(x)):
            worksheet.write(count + i + 1, 0, x[i][0])
            worksheet.write(count + i + 1, 1, x[i][1])
            worksheet.write(count + i + 1, 2, x[i][2])
            worksheet.write(count + i + 1, 3, x[i][3])
        count = count + len(x)
        print("Done")


    def Geturls(q, x):
        url = "https://s.taobao.com/search?q=" + q + "&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm" \
              "=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306"
        urls = []
        urls.append(url)
        if x == 1:
            return urls
        for i in range(1, x):
            url = "https://s.taobao.com/search?q=" + q + "&commend=all&ssid=s5-e&search_type=item" \
                  "&sourceId=tb.index&spm=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306" \
                  "&bcoffset=3&ntoffset=3&p4ppushleft=1%2C48&s=" + str(i * 44)
            urls.append(url)
        return urls


    def GetHtml(url):
        r = requests.get(url, headers=headers)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r


    if __name__ == "__main__":
        count = 0
        headers = {
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36",
            "cookie": "",
        }
        q = input("Enter the product name: ")
        x = int(input("How many pages do you want to scrape? "))
        urls = Geturls(q, x)
        workbook = xlsxwriter.Workbook(q + ".xlsx")
        worksheet = workbook.add_worksheet()
        worksheet.set_column('A:A', 70)
        worksheet.set_column('B:B', 20)
        worksheet.set_column('C:C', 20)
        worksheet.set_column('D:D', 20)
        worksheet.write('A1', 'Name')
        worksheet.write('B1', 'Price')
        worksheet.write('C1', 'Region')
        worksheet.write('D1', 'Buyers')
        for url in urls:
            html = GetHtml(url)
            GetxxintoExcel(html.text)
            time.sleep(5)
        workbook.close()
