Deploying Hive Quickly with Docker-Compose: A Detailed Tutorial

I. Overview

Deploying Hive with docker-compose builds on the Hadoop deployment from the previous article. Hive is the most widely used data-warehouse service, so it is worth integrating. A docker-compose deployment lets you stand up the services quickly with minimal resources and time. This makes it convenient for learning, testing and verifying features.

II. Prerequisites

1) Deploy Docker

# Install yum-utils, which provides the yum-config-manager tool

yum -y install yum-utils

# Recommended: use the Alibaba Cloud mirror of the Docker CE repo (the official repo is shown commented out)

#yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install docker-ce

yum install -y docker-ce

# Start Docker now and enable it at boot

systemctl enable --now docker

docker --version

2) Deploy docker-compose

curl -SL https://github.com/docker/compose/releases/download/v2.16.0/docker-compose-linux-x86_64 -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

docker-compose --version
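The download URL above hard-codes the x86_64 binary. The sketch below derives the URL from the CPU architecture. It assumes the v2 release assets are named `docker-compose-linux-x86_64` and `docker-compose-linux-aarch64`; the function name `compose_url` is hypothetical.

```shell
#!/bin/sh
# Build the docker-compose v2 download URL for a version and a CPU architecture.
# Assumption: release assets are named docker-compose-linux-x86_64 / docker-compose-linux-aarch64.
compose_url() {
    version="$1"
    arch="$2"
    case "$arch" in
        x86_64|amd64) arch=x86_64 ;;
        aarch64|arm64) arch=aarch64 ;;
        *) echo "unsupported architecture: $arch" >&2; return 1 ;;
    esac
    echo "https://github.com/docker/compose/releases/download/${version}/docker-compose-linux-${arch}"
}

# Usage on the host:
# curl -SL "$(compose_url v2.16.0 "$(uname -m)")" -o /usr/local/bin/docker-compose
```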

III. Create the Network

# Create the network. Note: do not name it hadoop_network (with an underscore), otherwise HiveServer2 will have problems at startup!

docker network create hadoop-network

# Verify

docker network ls
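`docker network create` fails if the network already exists, which makes this step awkward to re-run. The sketch below is a small idempotent wrapper; the function name `ensure_network` is hypothetical.

```shell
#!/bin/sh
# Create a Docker network only if it does not exist yet, so the step can be re-run safely.
ensure_network() {
    name="${1:-hadoop-network}"
    if docker network inspect "$name" >/dev/null 2>&1; then
        echo "network $name already exists"
    else
        docker network create "$name"
    fi
}

# Usage: ensure_network hadoop-network
```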

IV. Deploy MySQL

1) MySQL image

docker pull mysql:5.7

docker tag mysql:5.7 registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/mysql:5.7

2) Configuration

mkdir -p conf/ data/db/

cat >conf/my.cnf<<EOF

[mysqld]

character-set-server=utf8

log-bin=mysql-bin

server-id=1

pid-file = /var/run/mysqld/mysqld.pid

socket = /var/run/mysqld/mysqld.sock

datadir = /var/lib/mysql

sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION

symbolic-links=0

secure_file_priv =

wait_timeout=120

interactive_timeout=120

default-time_zone = '+8:00'

skip-external-locking

skip-name-resolve

open_files_limit = 10240

max_connections = 1000

max_connect_errors = 6000

table_open_cache = 800

max_allowed_packet = 40m

sort_buffer_size = 2M

join_buffer_size = 1M

thread_cache_size = 32

query_cache_size = 64M

transaction_isolation = READ-COMMITTED

tmp_table_size = 128M

max_heap_table_size = 128M

log-bin = mysql-bin

sync-binlog = 1

binlog_format = ROW

binlog_cache_size = 1M

key_buffer_size = 128M

read_buffer_size = 2M

read_rnd_buffer_size = 4M

bulk_insert_buffer_size = 64M

lower_case_table_names = 1

explicit_defaults_for_timestamp=true

skip_name_resolve = ON

event_scheduler = ON

log_bin_trust_function_creators = 1

innodb_buffer_pool_size = 512M

innodb_flush_log_at_trx_commit = 1

innodb_file_per_table = 1

innodb_log_buffer_size = 4M

innodb_log_file_size = 256M

innodb_max_dirty_pages_pct = 90

innodb_read_io_threads = 4

innodb_write_io_threads = 4

EOF
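The file above sets some options twice: `log-bin` appears on two lines, and `skip-name-resolve` reappears as `skip_name_resolve`. MySQL treats `-` and `_` in option names as equivalent, and later settings generally override earlier ones, so the duplicates here are harmless. A quick check can still catch conflicting duplicates. The awk sketch below assumes simple `key = value` lines; the function name `dup_options` is hypothetical.

```shell
#!/bin/sh
# Print option names that occur more than once in a MySQL option file.
# Dashes and underscores are normalized because MySQL treats them as equivalent in option names.
dup_options() {
    awk '
        /^[ \t]*$/ { next }               # blank line
        /^[ \t]*(#|;|\[)/ { next }        # comment or [section] header
        {
            key = $0
            sub(/=.*/, "", key)           # keep only the part before "="
            gsub(/[ \t]/, "", key)        # strip whitespace
            gsub(/-/, "_", key)           # log-bin and log_bin are the same option
            if (seen[key]++ == 1) print key   # report each duplicated name once
        }
    ' "$1"
}

# Usage: dup_options conf/my.cnf
```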

3) Compose file (mysql-compose.yaml)

version: '3'
services:
  db:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/mysql:5.7 # MySQL version
    container_name: mysql
    hostname: mysql
    volumes:
      - ./data/db:/var/lib/mysql
      - ./conf/my.cnf:/etc/mysql/mysql.conf.d/mysqld.cnf
    restart: always
    ports:
      - "13306:3306"
    networks:
      # Must be the same network as the Hive services, which reach this host as "mysql"
      - hadoop-network
    environment:
      MYSQL_ROOT_PASSWORD: 123456 # root password
      secure_file_priv:
    healthcheck:
      # MySQL does not speak HTTP, so probe it with mysqladmin instead of curl
      test: ["CMD-SHELL", "mysqladmin ping -h 127.0.0.1 -uroot -p123456 --silent || exit 1"]
      interval: 10s
      timeout: 5s
      retries: 3
# Attach to the externally created network
networks:
  hadoop-network:
    external: true

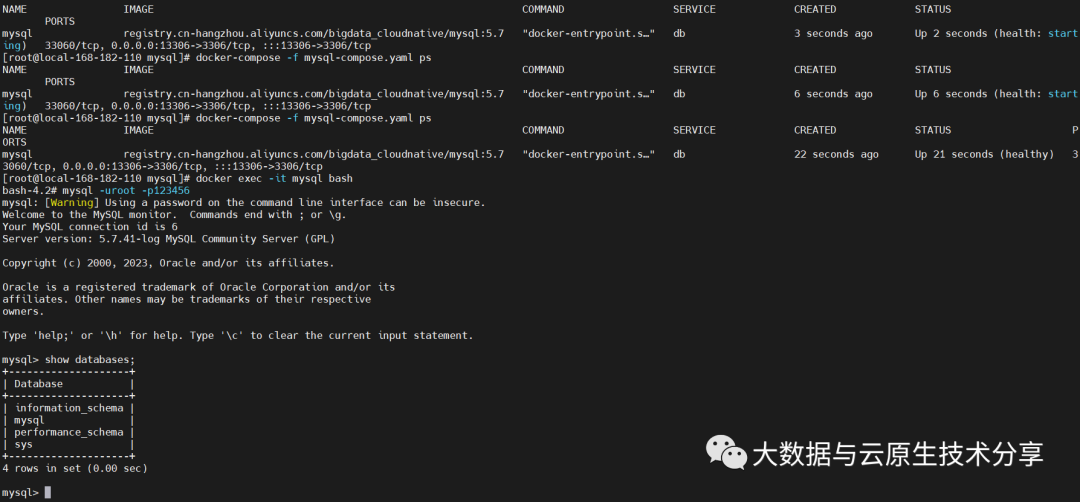

4) Deploy MySQL

docker-compose -f mysql-compose.yaml up -d

docker-compose -f mysql-compose.yaml ps

# Log in to MySQL inside the container

docker exec -it mysql mysql -uroot -p123456
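On first start, the MySQL container initializes its data directory before it accepts connections. Logging in or starting Hive immediately may therefore fail. The polling sketch below assumes the container name `mysql` and the root password `123456` from the compose file. `mysqladmin ping` succeeds once the server is running. The function name `wait_mysql` is hypothetical.

```shell
#!/bin/sh
# Poll MySQL inside the container until it answers, giving up after a number of attempts.
# Assumptions: container "mysql" and root password "123456", as in the compose file above.
wait_mysql() {
    container="${1:-mysql}"
    attempts="${2:-30}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if docker exec "$container" mysqladmin ping -h 127.0.0.1 -uroot -p123456 --silent >/dev/null 2>&1; then
            echo "MySQL in $container is up"
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    echo "MySQL in $container did not come up after $attempts attempts" >&2
    return 1
}

# Usage: wait_mysql mysql 60 && docker exec -it mysql mysql -uroot -p123456
```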

V. Deploy Hive

1) Download Hive

Download page: http://archive.apache.org/dist/hive

# Download

wget http://archive.apache.org/dist/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz

# Extract

tar -zxvf apache-hive-3.1.3-bin.tar.gz
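Apache publishes checksum files next to its release artifacts. The sketch below verifies the tarball before unpacking. It assumes an `apache-hive-3.1.3-bin.tar.gz.sha256` file is available in the same directory (check the download page) and that its first field is the hex digest; adjust the parsing if your checksum file uses a different layout. The function name `verify_sha256` is hypothetical.

```shell
#!/bin/sh
# Compare a file's SHA-256 with the first whitespace-separated field of a checksum file.
verify_sha256() {
    file="$1"
    sumfile="$2"
    expected=$(awk '{print tolower($1); exit}' "$sumfile")
    actual=$(sha256sum "$file" | awk '{print $1}')
    if [ "$expected" = "$actual" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (expected $expected, got $actual)" >&2
        return 1
    fi
}

# Usage (assumes the .sha256 file exists on the mirror):
# wget http://archive.apache.org/dist/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz.sha256
# verify_sha256 apache-hive-3.1.3-bin.tar.gz apache-hive-3.1.3-bin.tar.gz.sha256
```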

2) Configuration

images/hive-config/hive-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- HDFS directory used as the Hive warehouse -->
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive_remote/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>false</value>
  </property>
  <!-- JDBC URL of the MySQL database; hive_metastore is the database name (any name works) and is created automatically. In XML every & must be written as &amp; -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://mysql:3306/hive_metastore?createDatabaseIfNotExist=true&amp;useSSL=false&amp;serverTimezone=Asia/Shanghai</value>
  </property>
  <!-- MySQL JDBC driver (com.mysql.jdbc.Driver matches Connector/J 5.1.49) -->
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <!--<value>com.mysql.cj.jdbc.Driver</value>-->
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <!-- MySQL user -->
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <!-- MySQL password -->
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <!-- Whether to verify the metastore schema version -->
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <property>
    <name>system:user.name</name>
    <value>root</value>
    <description>user name</description>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://hive-metastore:9083</value>
  </property>
  <!-- HiveServer2 bind host -->
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>0.0.0.0</value>
    <description>Bind host on which to run the HiveServer2 Thrift service.</description>
  </property>
  <!-- HiveServer2 port (default 10000) -->
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
  </property>
</configuration>
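Hadoop reads hive-site.xml as XML, so a bare `&` in a value (such as the query string of the JDBC URL) is a parse error and must be written as `&amp;`. Where `xmllint` is installed, `xmllint --noout hive-site.xml` checks that the whole file is well-formed. The dependency-free sketch below only looks for unescaped ampersands; the function name `bare_ampersands` is hypothetical.

```shell
#!/bin/sh
# Print (with line numbers) lines containing an '&' that does not start a predefined or numeric XML entity.
# Exit status 0 means at least one bare '&' was found.
bare_ampersands() {
    sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9a-fA-F]+);//g' "$1" | grep -n '&'
}

# Usage:
# if bare_ampersands images/hive-config/hive-site.xml; then echo "escape these as &amp;"; fi
```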

3) Startup script (bootstrap.sh)

#!/usr/bin/env sh

# Block until host $1 accepts TCP connections on port $2 (requires nc in the image)
wait_for() {
    echo "Waiting for $1 to listen on $2..."
    while ! nc -z "$1" "$2"; do echo waiting...; sleep 1s; done
}

start_hdfs_namenode() {
    # Format HDFS only once; the marker file is created only if the format succeeded
    if [ ! -f /tmp/namenode-formated ]; then
        ${HADOOP_HOME}/bin/hdfs namenode -format > /tmp/namenode-format.log 2>&1 && touch /tmp/namenode-formated
    fi
    ${HADOOP_HOME}/bin/hdfs --loglevel INFO --daemon start namenode
    tail -f ${HADOOP_HOME}/logs/*namenode*.log
}

start_hdfs_datanode() {
    wait_for "$1" "$2"
    ${HADOOP_HOME}/bin/hdfs --loglevel INFO --daemon start datanode
    tail -f ${HADOOP_HOME}/logs/*datanode*.log
}

start_yarn_resourcemanager() {
    ${HADOOP_HOME}/bin/yarn --loglevel INFO --daemon start resourcemanager
    tail -f ${HADOOP_HOME}/logs/*resourcemanager*.log
}

start_yarn_nodemanager() {
    wait_for "$1" "$2"
    ${HADOOP_HOME}/bin/yarn --loglevel INFO --daemon start nodemanager
    tail -f ${HADOOP_HOME}/logs/*nodemanager*.log
}

start_yarn_proxyserver() {
    wait_for "$1" "$2"
    ${HADOOP_HOME}/bin/yarn --loglevel INFO --daemon start proxyserver
    tail -f ${HADOOP_HOME}/logs/*proxyserver*.log
}

start_mr_historyserver() {
    wait_for "$1" "$2"
    ${HADOOP_HOME}/bin/mapred --loglevel INFO --daemon start historyserver
    tail -f ${HADOOP_HOME}/logs/*historyserver*.log
}

start_hive_metastore() {
    # Wait for the dependency passed in by docker-compose (a DataNode) before starting
    wait_for "$1" "$2"
    # Initialize the metastore schema in MySQL only once; the marker is created only on success
    if [ ! -f ${HIVE_HOME}/formated ]; then
        ${HIVE_HOME}/bin/schematool -initSchema -dbType mysql --verbose > ${HIVE_HOME}/schematool.log 2>&1 && touch ${HIVE_HOME}/formated
    fi
    ${HIVE_HOME}/bin/hive --service metastore
}

start_hive_hiveserver2() {
    # Wait for the metastore passed in by docker-compose before starting HiveServer2
    wait_for "$1" "$2"
    ${HIVE_HOME}/bin/hive --service hiveserver2
}

case $1 in
    hadoop-hdfs-nn)
        start_hdfs_namenode
        ;;
    hadoop-hdfs-dn)
        start_hdfs_datanode "$2" "$3"
        ;;
    hadoop-yarn-rm)
        start_yarn_resourcemanager
        ;;
    hadoop-yarn-nm)
        start_yarn_nodemanager "$2" "$3"
        ;;
    hadoop-yarn-proxyserver)
        start_yarn_proxyserver "$2" "$3"
        ;;
    hadoop-mr-historyserver)
        start_mr_historyserver "$2" "$3"
        ;;
    hive-metastore)
        start_hive_metastore "$2" "$3"
        ;;
    hive-hiveserver2)
        start_hive_hiveserver2 "$2" "$3"
        ;;
    *)
        echo "Please specify a valid service to start."
        exit 1
        ;;
esac
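The `wait_for` loop in the script retries forever, so a container whose dependency never comes up hangs without a clear error. A bounded variant is sketched below. The default of 60 attempts is an arbitrary choice, and like the original it assumes `nc` is available in the image. The function name `wait_for_bounded` is hypothetical.

```shell
#!/bin/sh
# Wait until host:port accepts TCP connections, giving up after max_tries one-second attempts.
wait_for_bounded() {
    host="$1"
    port="$2"
    max_tries="${3:-60}"
    n=0
    echo "Waiting for $host to listen on $port..."
    until nc -z "$host" "$port"; do
        n=$((n + 1))
        if [ "$n" -ge "$max_tries" ]; then
            echo "Timed out waiting for $host:$port" >&2
            return 1
        fi
        sleep 1
    done
    return 0
}

# Usage inside a start_* function: wait_for_bounded "$1" "$2" 120 || exit 1
```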

4) Build the image (Dockerfile)

FROM registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop:v1

COPY hive-config/* ${HIVE_HOME}/conf/

COPY bootstrap.sh /opt/apache/

COPY mysql-connector-java-5.1.49/mysql-connector-java-5.1.49-bin.jar ${HIVE_HOME}/lib/

RUN sudo mkdir -p /home/hadoop/ && sudo chown -R hadoop:hadoop /home/hadoop/

#RUN yum -y install which

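Build the image:

# Build the image

docker build --no-cache -t registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1 .

# Push the image (optional)

docker push registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1

### Parameter notes

# -t: name (and tag) of the image

# . : build context; the Dockerfile is read from the current directory

# -f: path to a different Dockerfile (not needed here)

# --no-cache: do not use the build cache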
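The Dockerfile COPYs `hive-config/*`, `bootstrap.sh` and the MySQL connector jar from the build context. A missing file only shows up as a failed build step, and the connector jar has to be placed in the context beforehand. The sketch below checks the context up front; the paths mirror the COPY lines above, and the function name `check_build_context` is hypothetical.

```shell
#!/bin/sh
# Check that every file the Dockerfile needs exists in the build context before running docker build.
check_build_context() {
    dir="${1:-.}"
    missing=0
    for f in Dockerfile bootstrap.sh hive-config/hive-site.xml \
             mysql-connector-java-5.1.49/mysql-connector-java-5.1.49-bin.jar; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage: check_build_context . && docker build --no-cache -t registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1 .
```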

5) Compose file (docker-compose.yaml)

version: '3'
services:
  hadoop-hdfs-nn:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-hdfs-nn
    hostname: hadoop-hdfs-nn
    restart: always
    privileged: true
    env_file:
      - .env
    ports:
      - "30070:${HADOOP_HDFS_NN_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-hdfs-nn"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_HDFS_NN_PORT} || exit 1"]
      interval: 20s
      timeout: 20s
      retries: 3
  hadoop-hdfs-dn-0:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-hdfs-dn-0
    hostname: hadoop-hdfs-dn-0
    restart: always
    depends_on:
      - hadoop-hdfs-nn
    env_file:
      - .env
    ports:
      - "30864:${HADOOP_HDFS_DN_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-hdfs-dn hadoop-hdfs-nn ${HADOOP_HDFS_NN_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_HDFS_DN_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-hdfs-dn-1:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-hdfs-dn-1
    hostname: hadoop-hdfs-dn-1
    restart: always
    depends_on:
      - hadoop-hdfs-nn
    env_file:
      - .env
    ports:
      - "30865:${HADOOP_HDFS_DN_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-hdfs-dn hadoop-hdfs-nn ${HADOOP_HDFS_NN_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_HDFS_DN_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-hdfs-dn-2:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-hdfs-dn-2
    hostname: hadoop-hdfs-dn-2
    restart: always
    depends_on:
      - hadoop-hdfs-nn
    env_file:
      - .env
    ports:
      - "30866:${HADOOP_HDFS_DN_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-hdfs-dn hadoop-hdfs-nn ${HADOOP_HDFS_NN_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_HDFS_DN_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-yarn-rm:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-yarn-rm
    hostname: hadoop-yarn-rm
    restart: always
    env_file:
      - .env
    ports:
      - "30888:${HADOOP_YARN_RM_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-yarn-rm"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${HADOOP_YARN_RM_PORT} || exit 1"]
      interval: 20s
      timeout: 20s
      retries: 3
  hadoop-yarn-nm-0:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-yarn-nm-0
    hostname: hadoop-yarn-nm-0
    restart: always
    depends_on:
      - hadoop-yarn-rm
    env_file:
      - .env
    ports:
      - "30042:${HADOOP_YARN_NM_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-yarn-nm hadoop-yarn-rm ${HADOOP_YARN_RM_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_YARN_NM_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-yarn-nm-1:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-yarn-nm-1
    hostname: hadoop-yarn-nm-1
    restart: always
    depends_on:
      - hadoop-yarn-rm
    env_file:
      - .env
    ports:
      - "30043:${HADOOP_YARN_NM_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-yarn-nm hadoop-yarn-rm ${HADOOP_YARN_RM_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_YARN_NM_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-yarn-nm-2:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-yarn-nm-2
    hostname: hadoop-yarn-nm-2
    restart: always
    depends_on:
      - hadoop-yarn-rm
    env_file:
      - .env
    ports:
      - "30044:${HADOOP_YARN_NM_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-yarn-nm hadoop-yarn-rm ${HADOOP_YARN_RM_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "curl --fail http://localhost:${HADOOP_YARN_NM_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-yarn-proxyserver:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-yarn-proxyserver
    hostname: hadoop-yarn-proxyserver
    restart: always
    depends_on:
      - hadoop-yarn-rm
    env_file:
      - .env
    ports:
      - "30911:${HADOOP_YARN_PROXYSERVER_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-yarn-proxyserver hadoop-yarn-rm ${HADOOP_YARN_RM_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${HADOOP_YARN_PROXYSERVER_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hadoop-mr-historyserver:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hadoop-mr-historyserver
    hostname: hadoop-mr-historyserver
    restart: always
    depends_on:
      - hadoop-yarn-rm
    env_file:
      - .env
    ports:
      - "31988:${HADOOP_MR_HISTORYSERVER_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hadoop-mr-historyserver hadoop-yarn-rm ${HADOOP_YARN_RM_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${HADOOP_MR_HISTORYSERVER_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 3
  hive-metastore:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hive-metastore
    hostname: hive-metastore
    restart: always
    depends_on:
      - hadoop-hdfs-dn-2
    env_file:
      - .env
    ports:
      - "30983:${HIVE_METASTORE_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hive-metastore hadoop-hdfs-dn-2 ${HADOOP_HDFS_DN_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${HIVE_METASTORE_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 5
  hive-hiveserver2:
    image: registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/hadoop_hive:v1
    user: "hadoop:hadoop"
    container_name: hive-hiveserver2
    hostname: hive-hiveserver2
    restart: always
    depends_on:
      - hive-metastore
    env_file:
      - .env
    ports:
      - "31000:${HIVE_HIVESERVER2_PORT}"
    command: ["sh","-c","/opt/apache/bootstrap.sh hive-hiveserver2 hive-metastore ${HIVE_METASTORE_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${HIVE_HIVESERVER2_PORT} || exit 1"]
      interval: 30s
      timeout: 30s
      retries: 5
# Attach to the externally created network
networks:
  hadoop-network:
    external: true

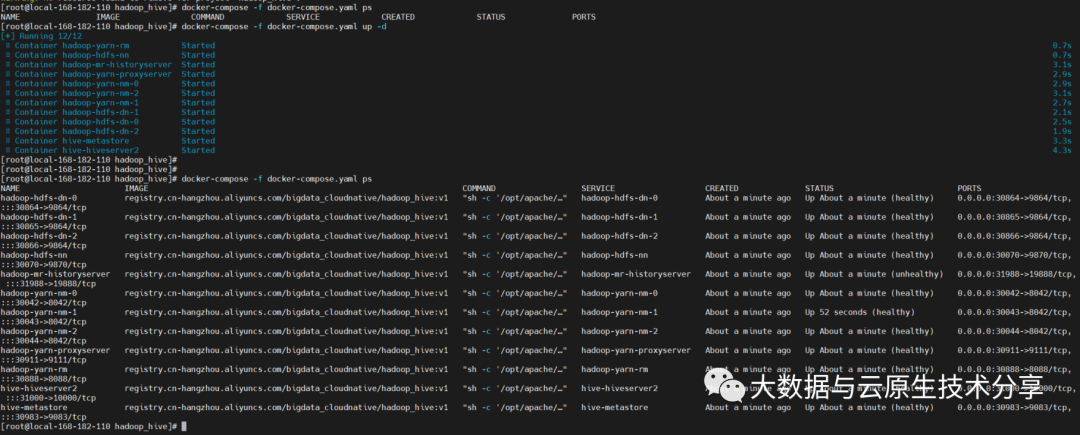
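The compose file reads its port variables from `.env`, whose contents belong to the previous Hadoop article and are not reproduced here. The values below are an assumption only: they are the Hadoop 3.x / Hive 3.x default ports that match the host-side mappings above (30070, 30864, 30888 and so on). The proxy-server port is a placeholder that must match `yarn.web-proxy.address` in your yarn-site.xml. Compare everything with your own `.env` before using it, and note that the script overwrites any existing `.env` in the current directory.

```shell
#!/bin/sh
# Write a .env next to docker-compose.yaml (overwrites an existing one).
# ASSUMPTION: default Hadoop 3.x / Hive 3.x ports; HADOOP_YARN_PROXYSERVER_PORT is a placeholder.
cat > .env <<'EOF'
HADOOP_HDFS_NN_PORT=9870
HADOOP_HDFS_DN_PORT=9864
HADOOP_YARN_RM_PORT=8088
HADOOP_YARN_NM_PORT=8042
HADOOP_YARN_PROXYSERVER_PORT=9111
HADOOP_MR_HISTORYSERVER_PORT=19888
HIVE_METASTORE_PORT=9083
HIVE_HIVESERVER2_PORT=10000
EOF
```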

6) Deploy

docker-compose -f docker-compose.yaml up -d

# Check status

docker-compose -f docker-compose.yaml ps
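`docker-compose up -d` returns as soon as the containers are created, not when the services are ready. Because every service defines a healthcheck, one way to wait is to poll `docker inspect --format '{{.State.Health.Status}}'` for each container. In this sketch the container list, the attempt count and the `WAIT_INTERVAL` variable (seconds between rounds, default 5) are illustrative choices. The function name `wait_healthy` is hypothetical.

```shell
#!/bin/sh
# Poll Docker's health status for each container until all report "healthy" or the attempts run out.
wait_healthy() {
    tries="$1"
    shift
    t=0
    while [ "$t" -lt "$tries" ]; do
        pending=""
        for c in "$@"; do
            status=$(docker inspect --format '{{.State.Health.Status}}' "$c" 2>/dev/null)
            [ "$status" = "healthy" ] || pending="$pending $c"
        done
        if [ -z "$pending" ]; then
            echo "all healthy"
            return 0
        fi
        t=$((t + 1))
        sleep "${WAIT_INTERVAL:-5}"
    done
    echo "still not healthy:$pending" >&2
    return 1
}

# Usage: wait_healthy 60 hadoop-hdfs-nn hive-metastore hive-hiveserver2
```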

Quick test:

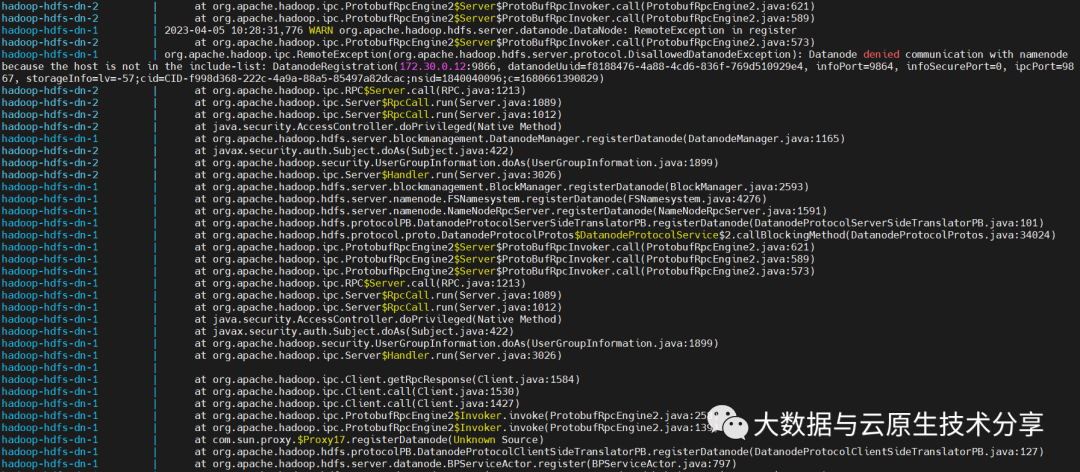
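The quick test can also be scripted. The sketch below runs `show databases;` through beeline inside the HiveServer2 container. It assumes `beeline` is on the image's PATH and that HiveServer2 accepts the user name `hadoop`. Depending on your impersonation (`hive.server2.enable.doAs`) and proxy-user settings, you may need a different user. The function name `hive_smoke_test` is hypothetical.

```shell
#!/bin/sh
# Run a trivial query through HiveServer2 with beeline inside the hive-hiveserver2 container.
# Assumptions: beeline is on PATH in the image and HS2 accepts the login user "hadoop".
hive_smoke_test() {
    port="${1:-10000}"
    docker exec hive-hiveserver2 beeline \
        -u "jdbc:hive2://localhost:${port}" -n hadoop \
        -e 'show databases;'
}

# Usage: hive_smoke_test    # should list at least the "default" database
```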

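[Problem] An error like the one below appears when the services are started more than once. The old data is still present, but the DataNodes' IP addresses have changed. A deployment directly on hosts does not hit this, because host IPs are fixed. The fix is to refresh the nodes. Deleting the old data also works, but refreshing the nodes is the recommended approach. In this setup nothing is mounted from the host; the old data survives because the old containers still exist. Possible solutions are listed below.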

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.protocol.DisallowedDatanodeException): Datanode denied communication with namenode because the host is not in the include-list: DatanodeRegistration(172.30.0.12:9866, datanodeUuid=f8188476-4a88-4cd6-836f-769d510929e4, infoPort=9864, infoSecurePort=0, ipcPort=9867, storageInfo=lv=-57;cid=CID-f998d368-222c-4a9a-88a5-85497a82dcac;nsid=1840040096;c=1680661390829)

[Solutions]

- Remove the old containers and start again

# Remove exited containers

docker rm $(docker ps -aq --filter status=exited)

# Start the services again

docker-compose -f docker-compose.yaml up -d

# Check status

docker-compose -f docker-compose.yaml ps

- Refresh the DataNodes from the NameNode container

docker exec -it hadoop-hdfs-nn hdfs dfsadmin -refreshNodes

- Refresh the DataNodes from any other node

# hadoop-hdfs-dn-0 is used as an example; -fs points dfsadmin at the NameNode RPC address

docker exec -it hadoop-hdfs-dn-0 hdfs dfsadmin -fs hdfs://hadoop-hdfs-nn:9000 -refreshNodes